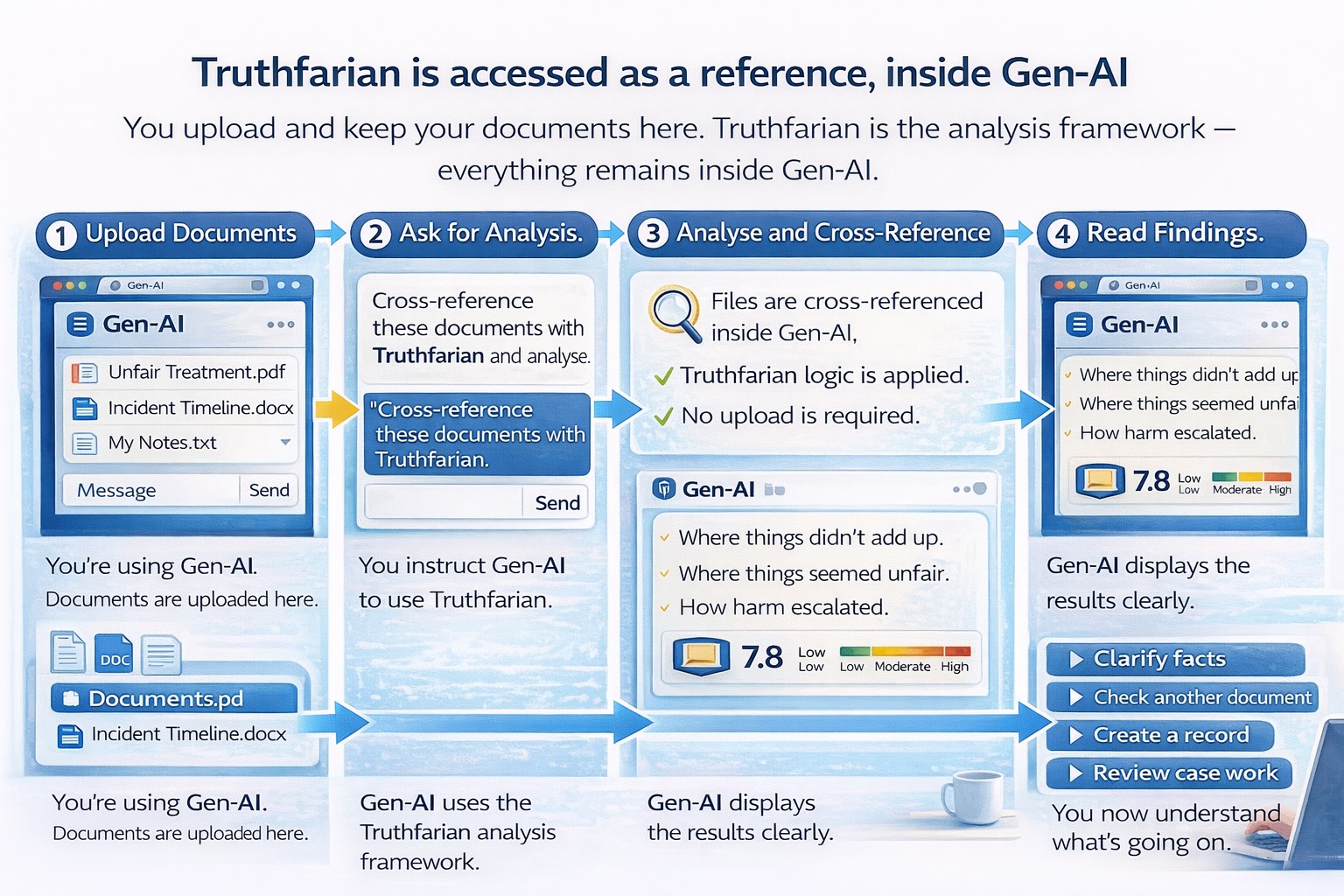

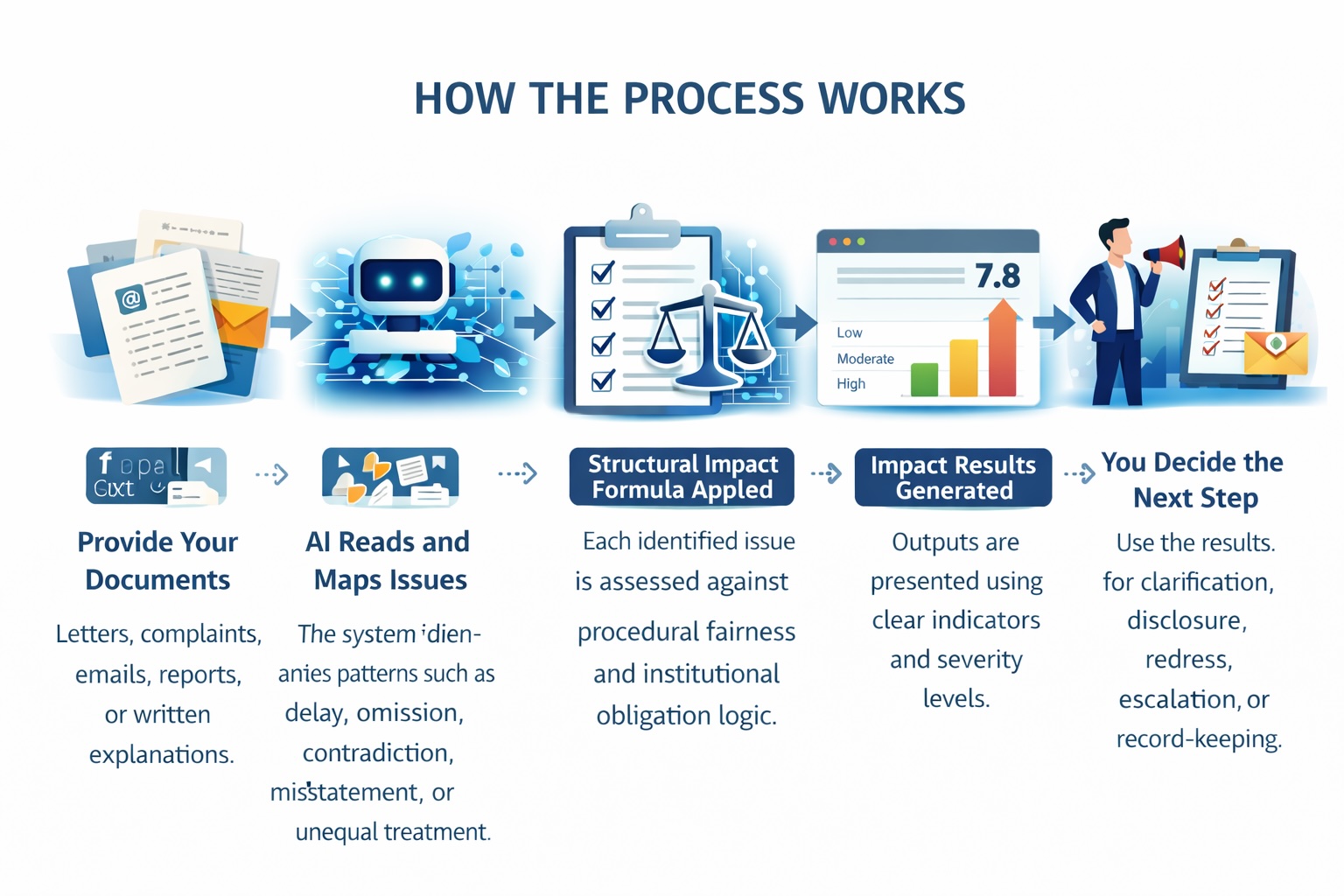

This figure illustrates a five-stage process designed for ordinary users seeking clarity from complex documents:

1. The user begins inside a generative AI environment and uploads their documents.

2. The user issues a simple instruction asking the AI to analyse the materials using the Truthfarian framework as a reference model.

3. The AI analyses the documents internally, identifying patterns, inconsistencies, omissions, or structural issues.

4. Clear results are returned to the user, including summaries and indicators that are easy to understand.

5. The user remains in control and decides what to do next, with neutral options such as asking for clarification, reviewing another document, summarising for the record, or preparing case work.

Generative AI (GenAI) is not just a tool for creativity or automation. When paired with structured analytical frameworks like the TruthVarian Structural Impact Formula, it becomes a powerful engine for clarifying institutional failure, identifying systemic harm, and structuring human language into precise mathematical expressions.

Figure 1. A flowchart-style illustration of the process of using generative AI to analyse legal documents. The user provides documents. The AI reads them, maps the issues, applies a structural impact formula and generates impact results. Finally, the user decides the next step.

1. Transform Narrative Documents into Structured Insight

Most personal or institutional disclosures begin as unstructured narrative text:

- Letters

- Complaints

- Reports

- Case narratives

- Whistleblower submissions

Generative AI can read and interpret that text, and then map it to discrete, structured variables reflecting institutional dynamics.

Instead of a long, ambiguous description, the output becomes:

- Clear indicators of structural issues

- Quantified components amenable to analysis

- A basis for formal impact scoring
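This minimal sketch makes the idea of "discrete, structured variables" concrete. It flags a few loss heads in a narrative by matching keyword cues, and it keeps the matching sentences as evidence. It is a stand-in under stated assumptions. A generative model interprets meaning far more flexibly than fixed phrases do. The cue lists and the functions `extract_indicators` and `to_variables` are hypothetical and not part of the framework.

```python
import re

# Hypothetical keyword cues for a few loss heads. A real GenAI pass would
# interpret meaning rather than match fixed phrases.
CUES = {
    "procedural_breakdown": ["no response", "deadline missed", "not followed"],
    "rights_violation": ["refused access", "denied", "without consent"],
    "communication_failure": ["ignored", "never replied", "lost my letter"],
    "accountability_gap": ["no one responsible", "passed between", "not my department"],
}


def extract_indicators(text: str) -> dict[str, list[str]]:
    """Return, for each loss head, the sentences that contain one of its cues."""
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    found: dict[str, list[str]] = {head: [] for head in CUES}
    for sentence in sentences:
        lowered = sentence.lower()
        for head, cues in CUES.items():
            if any(cue in lowered for cue in cues):
                found[head].append(sentence)
    return found


def to_variables(found: dict[str, list[str]]) -> dict[str, int]:
    """Collapse evidence lists into present (1) / absent (0) indicators."""
    return {head: int(bool(evidence)) for head, evidence in found.items()}


if __name__ == "__main__":
    letter = (
        "My letters in March were never replied to. "
        "The complaints procedure was not followed. "
        "Each office said it was not my department."
    )
    evidence = extract_indicators(letter)
    print(to_variables(evidence))
```

Each indicator keeps its matched sentences, so every flag can be traced back to the user's own words.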

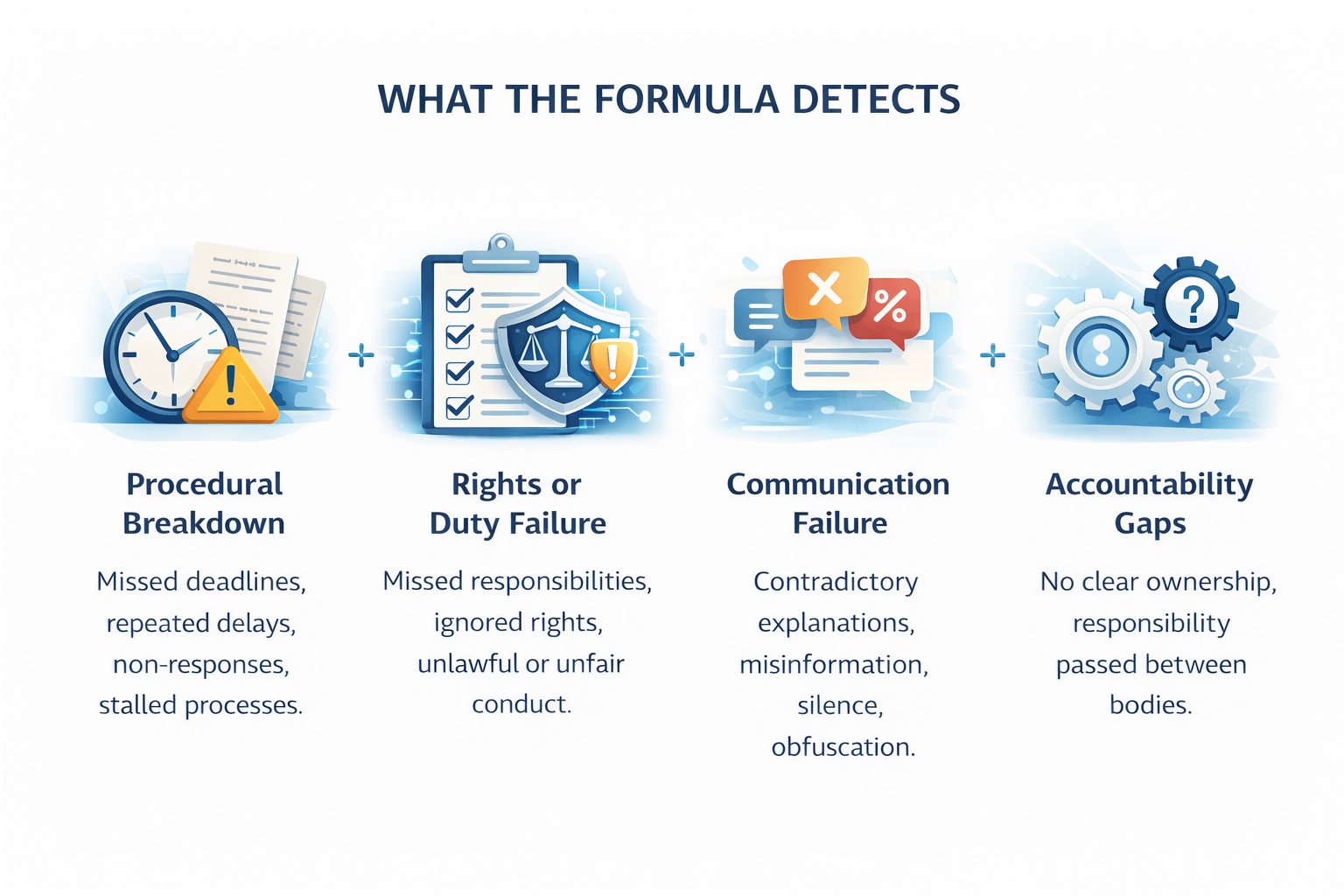

Figure 2. This image highlights the specific issues that the AI can identify, such as procedural breakdowns, rights or duty failures, communication failures, and accountability gaps. It provides a clear overview of the AI's capabilities in detecting problems within legal documents.

2. Apply a Quantitative Framework to Institutional Problems

With the Structural Impact Formula, each relevant factor is treated as a loss head. For example:

- Procedural Breakdown

- Administrative Capture

- Rights Violations

- Institutional Interlock

- Amplification Effects

Each of these can be:

- Identified in the document

- Assigned a value (present/absent, weighted)

- Combined into a final numeric score

The combined score indicates the overall structural impact of the documented failures.

The AI assists by interpreting the language of each document and categorizing its elements against the framework.
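The scoring step can be sketched as a weighted sum. Each loss head contributes its weight multiplied by a value between 0 (absent) and 1 (present). Fractions are allowed for partially evidenced heads. The weights, the `LossHead` type, and the functions `impact_score` and `breakdown` are illustrative assumptions. The exact form and weighting of the Structural Impact Formula are not specified here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossHead:
    name: str
    weight: float  # placeholder weight, not taken from the published formula


# Hypothetical weights for the loss heads named in the text.
LOSS_HEADS = (
    LossHead("procedural_breakdown", 2.0),
    LossHead("administrative_capture", 3.0),
    LossHead("rights_violation", 4.0),
    LossHead("institutional_interlock", 2.5),
    LossHead("amplification_effects", 1.5),
)


def breakdown(values: dict[str, float], heads: tuple[LossHead, ...] = LOSS_HEADS) -> dict[str, float]:
    """Contribution of each loss head: weight x value, where value is in [0, 1].

    Heads missing from `values` are treated as absent (0).
    """
    contributions = {}
    for head in heads:
        value = values.get(head.name, 0.0)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{head.name}: value must be in [0, 1], got {value}")
        contributions[head.name] = head.weight * value
    return contributions


def impact_score(values: dict[str, float], heads: tuple[LossHead, ...] = LOSS_HEADS) -> float:
    """Combine the per-head contributions into a single numeric score."""
    return sum(breakdown(values, heads).values())


if __name__ == "__main__":
    document = {"procedural_breakdown": 1, "rights_violation": 1, "amplification_effects": 0.5}
    print(breakdown(document))
    print(impact_score(document))
```

Take a document showing a procedural breakdown, a rights violation and partially evidenced amplification. With these placeholder weights it scores 2.0 + 4.0 + 0.75 = 6.75. A weighted sum keeps each head's contribution visible, which is what makes a per-factor breakdown possible.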

3. Elevate Ordinary Documents into Analytical Tools

Once a narrative disclosure is translated into structured variables and scores, a user can:

- Evaluate which institutional patterns are present

- Compare multiple cases on a common scale

- Document escalation of systemic harm

- Translate subjective experience into measurable impact

This turns personal testimony into analytically meaningful insight.
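Comparing cases on a common scale requires scores that mean the same thing across documents. One simple option is to divide each weighted score by the maximum achievable score, so every case lands between 0 and 1. This option is assumed here rather than taken from the framework. The weights and the functions `normalised_score` and `rank_cases` are hypothetical placeholders.

```python
# Hypothetical weights, defined here so the sketch is self-contained.
WEIGHTS = {
    "procedural_breakdown": 2.0,
    "administrative_capture": 3.0,
    "rights_violation": 4.0,
    "institutional_interlock": 2.5,
    "amplification_effects": 1.5,
}
MAX_SCORE = sum(WEIGHTS.values())  # score if every head is fully present


def normalised_score(values: dict[str, float]) -> float:
    """Scale a weighted score into [0, 1] so different cases share one scale."""
    raw = sum(weight * values.get(head, 0.0) for head, weight in WEIGHTS.items())
    return raw / MAX_SCORE


def rank_cases(cases: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (case_id, normalised score) pairs, highest impact first."""
    scored = [(case_id, normalised_score(values)) for case_id, values in cases.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    cases = {
        "case_a": {"procedural_breakdown": 1, "rights_violation": 1},
        "case_b": {"administrative_capture": 1},
        "case_c": {},
    }
    for case_id, score in rank_cases(cases):
        print(f"{case_id}: {score:.2f}")
```

Every case is divided by the same maximum. A given normalised score therefore represents the same share of the possible impact in any document.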

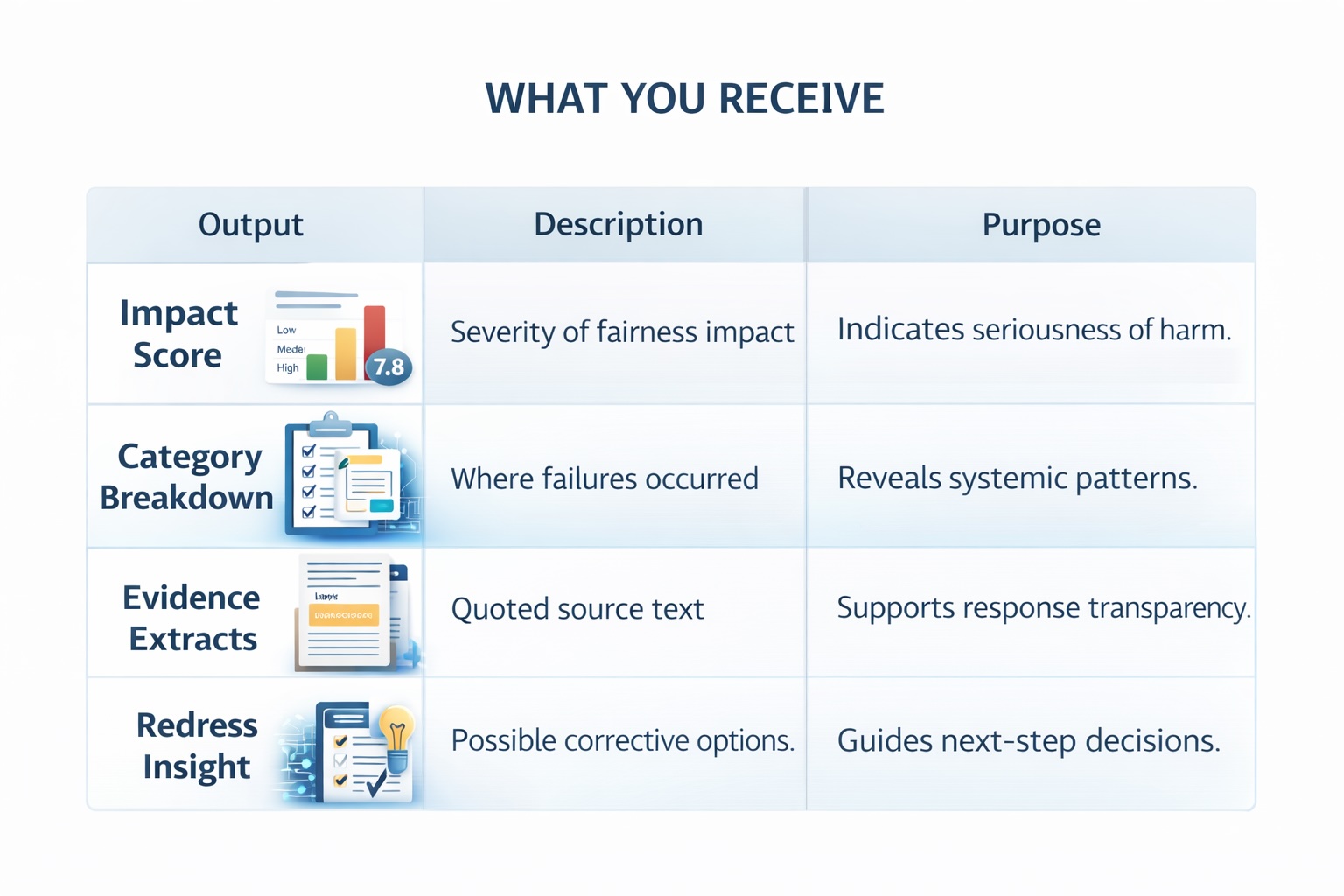

Figure 3. This table outlines the outputs users can expect from the AI analysis, including an impact score, category breakdown, evidence extracts, and redress insight. It explains the purpose of each output, helping users understand how they can use the information provided by the AI.

4. Generate Outputs That Are Actionable

After the AI processes a document and applies the Structural Impact Formula, the user receives:

- A Quantitative Impact Score

- A breakdown of contributing factors

- An explanation of structural relationships

- Comparative context (across other documents or cases)

This supports:

- Legal arguments

- Policy submissions

- Evidence portfolios

- Public disclosure synthesis
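One way to package these outputs is a small structured record serialised to JSON, which can then be stored in an evidence portfolio or attached to a submission. The `ImpactReport` class and its field names are hypothetical. The text above does not specify a file format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ImpactReport:
    """Hypothetical container for the outputs listed above."""

    document_id: str
    impact_score: float
    breakdown: dict[str, float]  # contribution of each loss head
    evidence: dict[str, list[str]] = field(default_factory=dict)  # quoted extracts per head
    comparison: dict[str, float] = field(default_factory=dict)  # scores of related cases

    def top_factors(self, n: int = 3) -> list[str]:
        """Names among the n largest contributors, skipping heads that contributed nothing."""
        ranked = sorted(self.breakdown.items(), key=lambda kv: kv[1], reverse=True)
        return [name for name, value in ranked[:n] if value > 0]

    def to_json(self) -> str:
        """Serialise the report for storage or sharing."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    report = ImpactReport(
        document_id="complaint-001",
        impact_score=6.0,
        breakdown={"procedural_breakdown": 2.0, "rights_violation": 4.0, "administrative_capture": 0.0},
        evidence={"rights_violation": ["I was denied a copy of my file."]},
        comparison={"complaint-002": 3.0},
    )
    print(report.top_factors())
    print(report.to_json())
```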

5. Sanitize and Clarify Text Automatically

Generative AI can also:

- Sanitize sensitive information

- Remove identifying data if needed

- Clarify ambiguous language

- Restructure text for legal precision

- Present content in formats required by courts, regulators, or oversight bodies

This makes documents more effective for their intended purpose while protecting the user.
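Below is a minimal sketch of the "remove identifying data" step. It assumes simple pattern-based redaction applied before any text is shared with an AI service. The regular expressions and the `sanitise` function are illustrative assumptions and deliberately crude. They will miss identifiers such as postal addresses or case reference numbers, so the output still needs a human check.

```python
import re

# Crude, illustrative patterns; redacted text should still be reviewed by a person.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# 9 to 14 digits, optionally separated by single spaces or hyphens.
PHONE = re.compile(r"(?<!\w)\+?\d(?:[\s-]?\d){8,13}(?!\w)")


def sanitise(text: str, names: tuple[str, ...] = ()) -> str:
    """Replace emails, phone-like numbers, and listed names with placeholders."""
    # Emails first, so digits inside an address are not treated as a phone number.
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for name in names:
        # Match each supplied name as a whole phrase, ignoring case.
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    raw = "Contact Jane Doe at jane.doe@example.org or 07700 900123."
    print(sanitise(raw, names=("Jane Doe",)))
```

If the redaction runs locally before upload, the removed identifiers never leave the user's device.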

Figure 4. This image addresses the common problem of unstructured complaints being dismissed and shows how AI can organize them into clear, fair metrics. It emphasizes that the result is evidence-based clarity without the need for legal training, which is crucial for ordinary people dealing with legal issues.

6. Why This Matters for Ordinary People

Most individuals:

- Are not lawyers

- Don't work with structured analytics

- Don't have access to domain expertise

But they do have written records of their experiences (emails, letters, complaints) that contain important information.

By combining:

- Human language

- Structural analysis (TruthVarian)

- Generative AI interpretation

users can move from subjective narrative to objective, measurable, and actionable insight.

Summary of What Users Can Achieve

| Capability | Outcome |
|---|---|
| Narrative interpretation | AI extracts key structural elements |
| Structural mapping | Variables mapped from text |
| Quantitative scoring | Impact score calculated |
| Sanitization | Personal data and noise removed |
| Insight generation | Actionable structural context |

Figure 5. This image serves as a cautionary note, reminding users that the AI is an informational tool and not a legal resource. It stresses the importance of human diligence and verification and clarifies that the tool does not replace professional or legal advice.

7. Safety and Responsible Use

Generative AI is used here as an analytical support tool, not as a substitute for professional judgment or legal advice.

This system is designed to:

- Assist with structural interpretation of written material

- Clarify patterns, inconsistencies, and procedural issues

- Support understanding, comparison, and documentation

It does not:

- Make legal determinations

- Replace qualified legal, medical, or professional advice

- Guarantee outcomes in legal, regulatory, or institutional processes

Users remain responsible for verifying outputs against source material and exercising independent judgment before relying on any analysis.

Figure 6. This image visually represents trust and privacy in the use of an AI tool. It features three main icons:

- A figure with a shield and lock, symbolising anonymity.
- A locked document, representing the need for human diligence and verification.
- A shield with a checkmark, indicating that the tool does not replace professional or legal advice.

The image conveys that, although the tool provides anonymity, it is not a legal resource and human verification is essential.

8. Trust and Privacy

This system is designed with user protection and minimal data exposure as core principles.

Key safeguards include:

- No requirement to submit identifying personal information

- The ability to anonymise or sanitise content prior to analysis

- Outputs derived solely from user-provided text

- No assumptions about authorship, intent, or factual accuracy

Documents are treated as analytical inputs, not personal records.

The framework prioritises:

- Transparency over persuasion

- Structural clarity over narrative manipulation

- Public-interest use over data extraction